I can use FreeBSD/Linux utilities directly on the server, and I can use OS X over the network (though working with 600GB over the LAN is not the fastest way). I'm able to write complex scripts in Bash and "+-" :) know Perl.

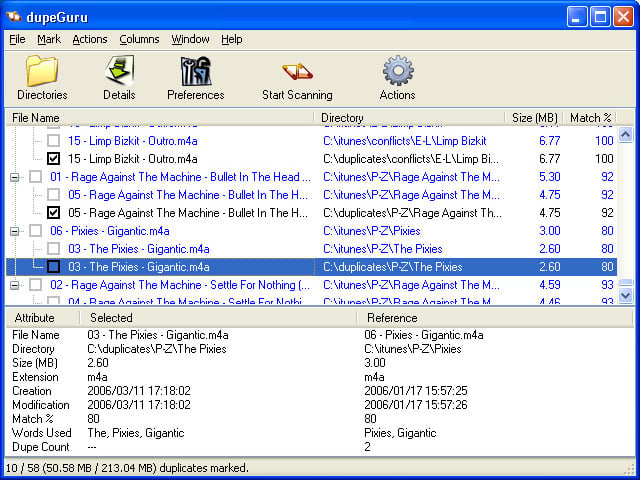

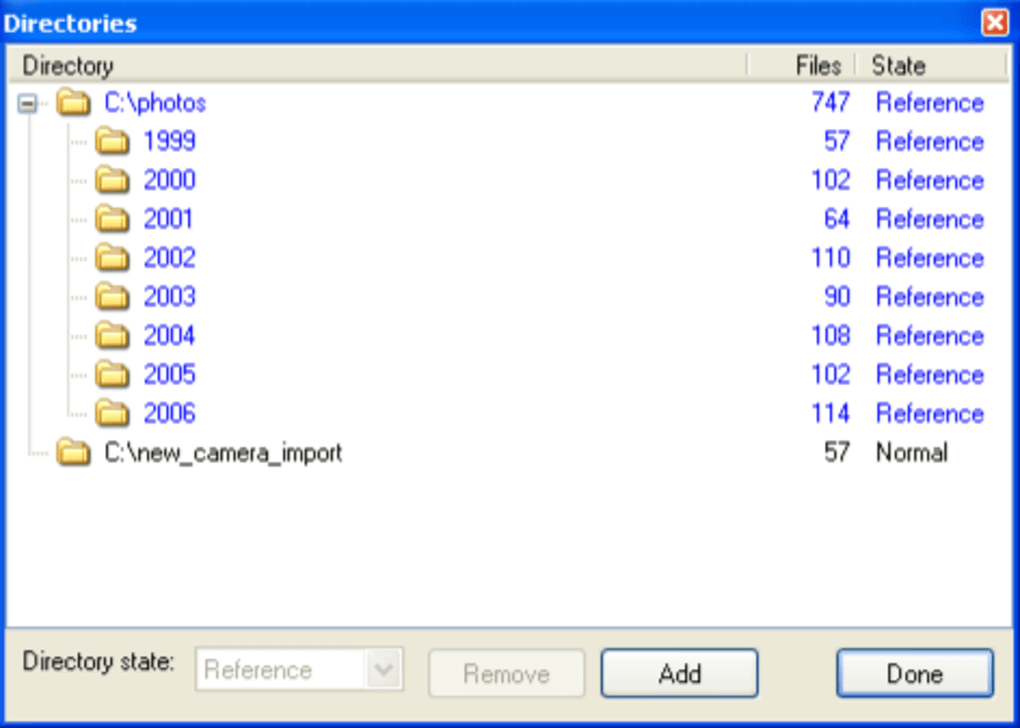

Photos come from family computers, from several partial backups to different external USB HDDs, from images reconstructed after disk disasters, and from different photo management software (iPhoto, Picasa, HP and many others :( ), all in several deep subdirectories. In short: a TERRIBLE MESS with many duplicates.

So far I have:

- searched the tree for files of the same size (fast) and computed an md5 checksum for those;
- collected the duplicated images (same size + same md5 = duplicate).

This helped a lot, but there are still MANY MANY duplicates:

- photos that differ only in the exif/IPTC data added by some photo management software, but the image is the same (or at least "looks the same" and has the same dimensions);
- or they are only resized versions of the original image;
- or they are "enhanced" versions of the originals, etc.

So, I want to filter out the photo duplicates that differ only in their exif tags while the image is the same (therefore file checksumming doesn't work, but image checksumming could). How can I find duplicates by checksumming only the "pure image bytes" in a JPG, without the exif/IPTC and similar meta information?
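To make the "checksum only the image bytes" idea concrete, here is a minimal Python sketch (standard library only). It walks the JPEG marker segments, skips every APPn segment (where exif/IPTC/XMP normally live) and every COM comment segment, and hashes the rest. The function names `jpeg_image_digest` and `find_duplicates` are made up for illustration. Assumptions worth stating: metadata sits before the first scan, trailing bytes after the image data are hashed too, and skipping all APPn segments also drops things like ICC profiles. It only catches files whose compressed image data is byte-identical, so resized or "enhanced" versions would still need a perceptual comparison.

```python
import hashlib
import os
import struct
from collections import defaultdict


def jpeg_image_digest(data: bytes) -> str:
    """MD5 of a JPEG's bytes with APPn (exif/IPTC/XMP) and COM segments skipped."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing SOI marker")
    h = hashlib.md5()
    pos = 2
    while pos < len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        # A marker may be preceded by any number of 0xFF fill bytes.
        while pos < len(data) and data[pos] == 0xFF:
            pos += 1
        if pos >= len(data):
            break
        marker = data[pos]
        pos += 1
        if marker == 0xD9:  # EOI: end of image
            break
        if marker == 0x01 or 0xD0 <= marker <= 0xD7:
            continue  # standalone markers carry no length field
        if pos + 2 > len(data):
            raise ValueError("truncated segment header")
        (length,) = struct.unpack(">H", data[pos:pos + 2])
        if length < 2:
            raise ValueError("invalid segment length")
        if marker == 0xDA:
            # SOS: hash the scan header and everything after it
            # (entropy-coded data, later scans, EOI).
            h.update(b"\xff\xda")
            h.update(data[pos:])
            break
        is_metadata = 0xE0 <= marker <= 0xEF or marker == 0xFE
        if not is_metadata:
            # Keep quantization/Huffman tables, frame header, etc.
            h.update(bytes([0xFF, marker]))
            h.update(data[pos:pos + length])
        pos += length
    return h.hexdigest()


def find_duplicates(root: str) -> dict:
    """Group *.jpg / *.jpeg files under root by metadata-free digest."""
    groups = defaultdict(list)
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            if not name.lower().endswith((".jpg", ".jpeg")):
                continue
            path = os.path.join(dirpath, name)
            try:
                with open(path, "rb") as f:
                    groups[jpeg_image_digest(f.read())].append(path)
            except (OSError, ValueError):
                continue  # unreadable or damaged file: skip it
    return {d: paths for d, paths in groups.items() if len(paths) > 1}
```

Calling `find_duplicates` on the photo directory returns a dict mapping each digest to the list of paths that share it. Since this cannot use the "same file size" shortcut (the metadata changes the size), every JPEG has to be read once, so it is probably best run directly on the server rather than over the LAN.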

I have approximately 600GB of photos collected over 13 years, now stored on a FreeBSD ZFS server.
